I am constantly learning as technology advances, focusing on recognizing the value of data and increasing my data literacy to stay "data-driven".

Project

Telecom Operators

Staff at a telecommunications company wanted to use autonomous sensor devices to automatically identify areas that require maintenance and, based on power usage, highlight opportunities to build out the network.

Details

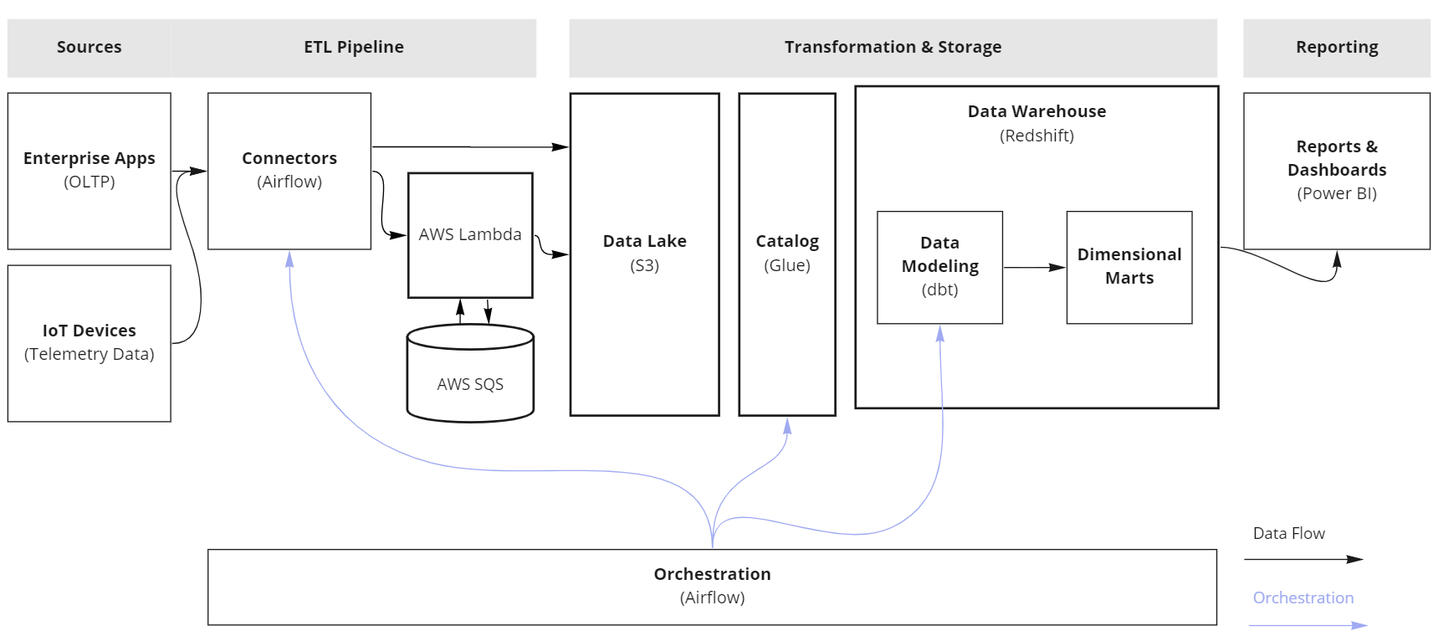

Sources

Telemetry data points from the sensors were the primary raw data source for tracking and building business metrics, while operational data describing the different models and entities supplied the dimensions needed for reporting.

ETL Pipeline

- Instrumentation: a custom Python SDK interfaces with the proprietary communication protocols, and Airflow DAGs orchestrate the processes that consolidate data points into files and send them to the ingestion point (S3).

- Collection: once files land in an S3 bucket, the resulting event is queued in SQS and handled by AWS Lambda, which scales well for light pre-processing and data cleaning before the data is ingested into another bucket.

- Integration: data from other sources, such as an OLTP database, is added on a schedule with Airflow operators to augment the primary data.
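The consolidation part of the instrumentation step can be sketched as follows. This is a minimal, hypothetical example that groups decoded data points into hourly newline-delimited JSON files whose keys are ready for upload to S3. The point schema (`device_id`, `ts`, `power_w`), the `raw/telemetry` prefix, and the JSON Lines format are assumptions; the real SDK output and file format are not described in this project.

```python
import json
from collections import defaultdict
from datetime import datetime, timezone

# Hypothetical decoded reading: {"device_id": str, "ts": epoch seconds, "power_w": float}


def partition_key(ts: int, prefix: str = "raw/telemetry") -> str:
    """Build a Hive-style key prefix (dt=YYYY-MM-DD/hour=HH) from an epoch timestamp."""
    moment = datetime.fromtimestamp(ts, tz=timezone.utc)
    return f"{prefix}/dt={moment:%Y-%m-%d}/hour={moment:%H}"


def consolidate(points: list[dict], batch_id: str) -> dict[str, str]:
    """Group points by hour and serialize each group as one JSON Lines file body."""
    groups: dict[str, list[str]] = defaultdict(list)
    for point in sorted(points, key=lambda p: p["ts"]):
        groups[partition_key(point["ts"])].append(json.dumps(point, sort_keys=True))
    # One object key per hourly partition; the value is the file content to upload.
    return {
        f"{partition}/{batch_id}.jsonl": "\n".join(lines) + "\n"
        for partition, lines in groups.items()
    }
```

In an Airflow DAG, one task could call a function like `consolidate` and pass the resulting key/body pairs to an upload task. Hourly partitions keep individual files small while avoiding one object per data point.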
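For the collection step, here is a minimal sketch of a Lambda handler that consumes an SQS batch whose messages are S3 event notifications. It assumes that S3 publishes to SQS and that SQS triggers the function. The cleaning rules in the hypothetical `clean` function are assumptions. The boto3 reads and the writes to the destination bucket are left out so the example runs without AWS.

```python
import json
from urllib.parse import unquote_plus


def s3_objects_from_sqs_event(event: dict) -> list[tuple[str, str]]:
    """Extract (bucket, key) pairs from an SQS batch carrying S3 event notifications."""
    objects = []
    for message in event.get("Records", []):
        body = json.loads(message["body"])
        # S3 sends an s3:TestEvent without "Records" when notifications are first set up.
        for record in body.get("Records", []):
            bucket = record["s3"]["bucket"]["name"]
            # Object keys arrive URL-encoded (e.g. spaces as '+').
            key = unquote_plus(record["s3"]["object"]["key"])
            objects.append((bucket, key))
    return objects


def clean(points: list[dict]) -> list[dict]:
    """Assumed light cleaning: drop points without a device id or with invalid power."""
    return [
        p for p in points
        if p.get("device_id")
        and isinstance(p.get("power_w"), (int, float))
        and p["power_w"] >= 0
    ]


def handler(event, context):
    # A deployed function would read each object with boto3, apply `clean`,
    # and write the result to the destination bucket; that I/O is omitted here.
    objects = s3_objects_from_sqs_event(event)
    return {"objects": [f"s3://{bucket}/{key}" for bucket, key in objects]}
```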
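The integration step is described only at the level of Airflow operators. A common pattern for augmenting data on a schedule is a watermark-based incremental extract. The sketch below runs it against an in-memory SQLite database that stands in for the OLTP system. The `devices` table, its columns, and the use of `updated_at` as the watermark are assumptions, not details from the project.

```python
import sqlite3


def extract_incremental(conn: sqlite3.Connection, watermark: str) -> tuple[list[tuple], str]:
    """Return rows of the hypothetical `devices` table changed after `watermark`,
    plus the new watermark (the latest `updated_at` seen, or the old one if no rows)."""
    rows = conn.execute(
        "SELECT id, name, site_id, updated_at FROM devices "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    # ISO-8601 strings sort chronologically, so the last row holds the max timestamp.
    new_watermark = rows[-1][-1] if rows else watermark
    return rows, new_watermark
```

In Airflow, the returned watermark must be persisted between runs, for example in an Airflow Variable. A strict `>` comparison can skip rows that share the last timestamp but commit after the extract runs. Overlapping the window slightly and deduplicating downstream is one way to handle this.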

Transformation & Storage

- Data Lake provides permanent storage for the data in Parquet format. It is manually partitioned and structured so that Redshift Spectrum can query it efficiently as external tables.

- Data Warehouse has a staging area, where models are prepared in a standard way, and a marts area that contains business-centric models. All transformations are run and documented with dbt.

- Data Catalog is updated whenever new data lands in S3 or new partitions are created, and it serves as the main discovery entry point for the data lake.
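With manual partitioning, each new partition must be registered before Redshift Spectrum can query it. The sketch below assumes a daily `dt=YYYY-MM-DD` Hive-style layout and uses hypothetical schema, table, bucket, and prefix names. It detects partitions present in newly landed keys and generates the matching `ALTER TABLE ... ADD IF NOT EXISTS PARTITION` statements. If the external schema is backed by the AWS Glue Data Catalog (an assumption here), running these statements also records the partitions in the catalog.

```python
import re

# Only dates in the expected layout are accepted, which also keeps the
# interpolated SQL below limited to well-formed values.
PARTITION_RE = re.compile(r"dt=(\d{4}-\d{2}-\d{2})/")


def new_partitions(keys: list[str], known: set[str]) -> list[str]:
    """Return partition values (dt) found in landed object keys but not yet registered."""
    found = {m.group(1) for key in keys if (m := PARTITION_RE.search(key))}
    return sorted(found - known)


def add_partition_sql(schema: str, table: str, bucket: str, prefix: str, dt: str) -> str:
    """Build a Redshift Spectrum statement that registers one partition of an external table."""
    location = f"s3://{bucket}/{prefix}/dt={dt}/"
    return (
        f"ALTER TABLE {schema}.{table} ADD IF NOT EXISTS "
        f"PARTITION (dt='{dt}') LOCATION '{location}';"
    )
```

`IF NOT EXISTS` makes re-running the registration harmless, which suits an event-driven or scheduled trigger that may see the same partition more than once.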
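The staging and marts layering can be illustrated without dbt. In this hypothetical sketch, SQLite views stand in for dbt models. A staging model standardizes the raw telemetry, and a mart joins those facts to the operational device dimension. All table and column names are assumptions. In dbt, each query would live in its own `.sql` file and reference upstream models with `{{ ref('...') }}`, and dbt would work out the build order and generate the documentation.

```python
import sqlite3

# Each entry stands in for one dbt model; insertion order doubles as build order here.
MODELS = {
    # Staging: rename and cast raw columns in one standard place.
    "stg_telemetry": """
        SELECT device_id,
               DATE(ts, 'unixepoch') AS reading_date,
               CAST(power_w AS REAL) AS power_w
        FROM raw_telemetry
    """,
    "stg_devices": """
        SELECT id AS device_id, site_id FROM raw_devices
    """,
    # Mart: business-centric model joining telemetry facts to the device dimension.
    "mart_daily_site_power": """
        SELECT d.site_id, t.reading_date,
               AVG(t.power_w) AS avg_power_w, COUNT(*) AS readings
        FROM stg_telemetry AS t
        JOIN stg_devices AS d USING (device_id)
        GROUP BY d.site_id, t.reading_date
    """,
}


def build(conn: sqlite3.Connection) -> None:
    """Materialize every model as a view, upstream models first."""
    for name, sql in MODELS.items():
        conn.execute(f"DROP VIEW IF EXISTS {name}")
        conn.execute(f"CREATE VIEW {name} AS {sql}")
```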

Reporting

Power BI was the main tool for dashboarding and analysis.